Minding over machine: the AI race

By Christopher Davis | China Daily USA | Updated: 2018-04-14 05:24

The race for dominance in artificial intelligence is heating up, especially between China and the United States, but as one report says, AI "is still largely shrouded in mystery", reports Christopher Davis from New York.

It's the hottest thing in technology now, but the co-founder of Google said: "I didn't see it coming, even though I was sitting right there".

"It" is artificial intelligence – AI.

"I didn't pay attention to it at all, to be perfectly honest," Google's Sergey Brin said in a session at the World Economic Forum's annual meeting in Davos last January.

But now everyone seems to be jumping on the bandwagon, yet many aren't exactly sure where it's going. As Brin said: "What can these things do? We don't really know the limits. It has incredible possibilities. I think it's impossible to forecast accurately."

A recent Boston Consulting Group-MIT Sloan Management Review survey of 3,000 international business executives found that 84 percent think AI will give them a competitive advantage over the next five years. Among respondents from the telecom, media and tech industries, 72 percent said they expect AI to have a significant impact on their business and products.

The catch is, few of them are sure exactly how. As the report says, "The field is still largely shrouded in mystery."

Funding for AI start-ups in the West has grown by leaps and bounds, from $4 billion in 2016 to more than $14 billion in 2017. Silicon Valley is awash in AI backing.

In July 2017, China's State Council announced a "New Generation AI Development Plan", setting benchmarks for AI industries and targeting, by 2030, a gross output of $151 billion for the core AI industry and $1.5 trillion for related industries. China aims to be No. 1.

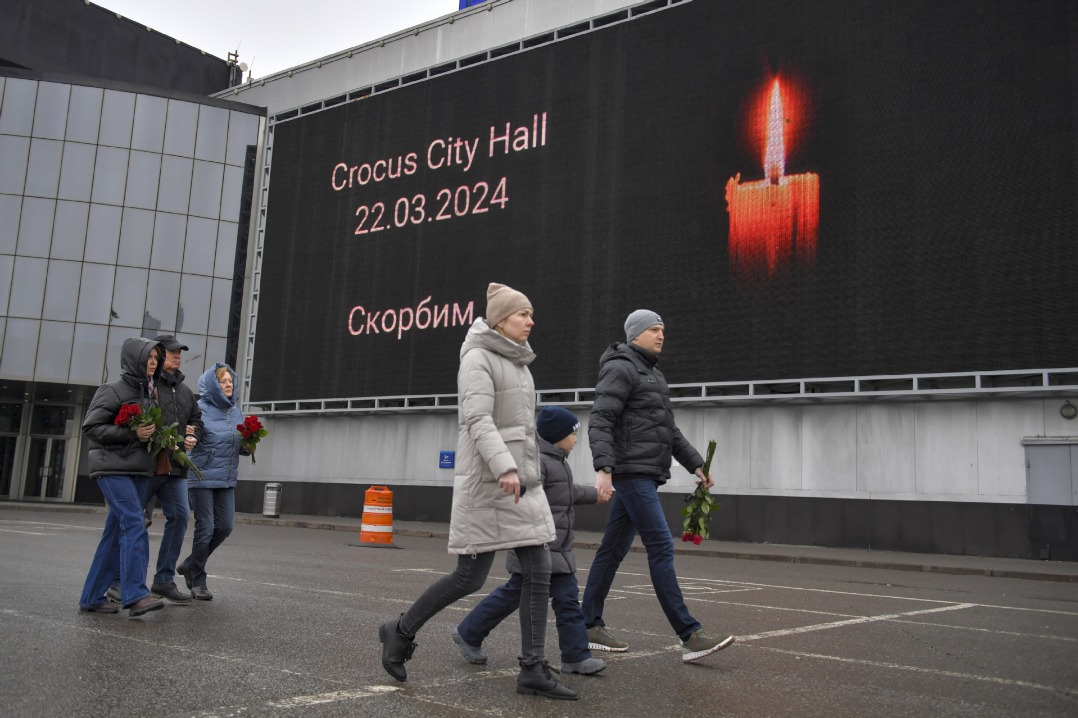

Last year, Russian President Vladimir Putin put an ominous spin on AI saying that it raises "colossal opportunities and threats that are difficult to predict now". But Putin did predict that: "The one who becomes the leader in this sphere will be the ruler of the world."

SpaceX/Tesla founder Elon Musk responded with the tweet: "China, Russia, soon all countries w(ith) strong computer science. Competition for AI superiority at national level most likely cause of WW3…"

Finish line

China and the US, the recognized leaders in the field, are locked in a race to the finish line, whatever that finish line may be.

A recent study from Oxford University's Future of Humanity Institute gave China a score of 17 for its overall capacity to develop AI technologies; the US scored 33.

Of the main drivers of AI development, namely hardware, research, talented scientists and their algorithms, and raw data, China trails the US in all but data. Of that it has a mountain, with more than a billion smartphone users and 750 million internet users.

Combined with strong government support, aggressive recruitment of talent and development of chips, China looks to close the gap fast.

But where it is all heading remains anyone's guess.

Before he died recently, physicist Stephen Hawking warned that the emergence of AI — computers that "can, in theory, emulate human intelligence, and exceed it" — could be the "worst event in the history of our civilization", if we don't find a way to control it.

Heavyweight scholars and tech leaders are forming groups in places like the Harvard Kennedy School of Government's Future Society and Max Tegmark's Future of Life Institute to envision ways to protect mankind from a menace that seems to loom but remains elusive.

The highest profile cabal is probably the handsomely endowed non-profit OpenAI, founded in 2015 by — who else? — guru of the future Elon Musk (who has since had to recuse himself over possible conflicts with his car company). The group's list of board members still reads like a Who's Who of Silicon Celebs.

Human extension

In its mission statement, OpenAI says it believes "AI should be an extension of individual human wills."

The statement goes on to say: "It's hard to fathom how much human-level AI could benefit society, and it's equally hard to imagine how much it could damage society if built or used incorrectly."

Now, three years later, OpenAI's policy and ethics advisor Michael Page told a reporter with futurism.com about his job of looking at "the long-term policy implications of advanced AI."

"I want to figure out what we can do today, if anything," he said. "It could be that the future is so uncertain there's nothing we can do."

Protecting the future means predicting the future, and no one is there yet.

One of the big problems with AI is that the term is increasingly thrown around to embrace just about anything that happens in the digital universe: YouTube picks your next video; the hiking boots you were looking at yesterday appear in a banner ad.

"It took me a whole book to describe what AI is and isn't," said Meredith Broussard, an assistant professor at the Arthur L. Carter Journalism Institute at New York University. Her new book, Artificial Unintelligence: How Computers Misunderstand the World (MIT Press), comes out at the end of the month.

"When people talk about artificial intelligence sometimes they mean 'machine learning' and sometimes they mean Hollywood imaginary things like The Terminator," she said. "The confusion comes from the fact that we have this term that has multiple meanings."

Broussard said it comes down to math and data, and that "machine learning" is a species of computational statistics.

"It turns out that when you have a truly vast amount of data," she said, "you can make really, really good guesses about things using computational methods."

Apparent edge

Here China's edge becomes apparent again. Liu Qingfeng, chairman of speech-recognition software developer iFlyTek, told the South China Morning Post, "China has the most internet users. And the Chinese government's determination to push the application of AI forward is unmatched in any other country."

"Machine learning" and its offspring "deep learning" are behind most of the stunning headlines that stir excitement about the emerging technology: AI translates news from Chinese to English in real time as well as any bilingually fluent human can. Computer teaches itself to distinguish cats from dogs. Google uses AI to help the Air Force analyze drone footage.

Google DeepMind's AlphaGo, having digested a huge database of expert and amateur Go games, estimates its next best moves and beats the hitherto undefeated world champion three out of four games. But wait.

AlphaGo Zero comes along and teaches itself the game from scratch, starting with just the rules and discovering new ways to beat itself. It then turns around and beats its older brother AlphaGo 100 games to zero.

The machine learning branch of AI turns out to be particularly good at visual tasks, such as picking a face out of a crowd or guessing a person's age, but, as Cambridge-educated mathematician David Foster points out, "these are not the kinds of problems that most businesses are trying to solve."

Foster, who co-founded the London-based consultancy Applied Data Science, advises clients on things like predictive models for customer behavior, price optimization and building their own data teams.

Their service, he said in an email, is driven by the three key aspects of any data science project: the business problem to solve, the data that's available and the desired outcome.

AI is getting hyped and he thinks that's not such a good thing: "The label 'AI' itself is beginning to carry the value, rather than the underlying technology. There is a real danger of businesses being sold the 'triple A' technique of AI, without really understanding exactly what is being invested in. And these things don't tend to end well."

The "triple A" sales pitch is a reference to the financial crash of 2008, when top-drawer AAA ratings were given to many securities that turned out to be the complete opposite because nobody really understood what was in them.

Foster thinks there's a parallel to be drawn with AI today, where companies are being sold the dream of AI as a solid investment without really understanding what technologies and techniques constitute commercial AI.

"With any kind of gold rush, there are a lot of charlatans," Broussard said. "I would caution people to be very clear about what computers can and can't do, because it's very easy to get carried away with what we imagine what AI might be."

China's recent use of facial recognition technology to spot fugitives in crowds offers a perfect example of the difference between machine learning and AI. Shown thousands and thousands of pairs of faces, the machine becomes better and faster than humans at making a match. That's machine learning.

When the machine makes the decision to make an arrest, that's AI. With something like that, Foster said, "There is a lot more to be wary of and the ethical dilemmas start multiplying." What if the "fugitive" is wrongly arrested? Who takes the blame for the error: the programmer, the police or the AI itself?

Not so fast

In her new book, Broussard makes the case that the wild enthusiasm for applying computer technology to every aspect of life has produced a lot of poorly designed and poorly performing systems.

"We are so eager to do everything digitally — hiring, driving, paying bills, even choosing romantic partners — that we have stopped demanding that our technology actually work," she writes.

She has coined the term "technochauvinism" to describe the belief that technology holds the key to everything and warns that "we should never assume that computers always get things right."

Broussard and Foster agree that the cyborg revolution, in which AIs suddenly gain consciousness and enslave mankind, isn't coming anytime soon.

"There's currently as much chance of this happening as your toaster and microwave starting a revolution from your kitchen," Foster said.

Driverless cars are probably the best example of a genuine AI technology that has the potential to affect daily life, according to Foster. There is little doubt that the machine learning underpinning driverless tech is superior to human judgment at keeping a car on the road. But there are other judgment calls that only humans can — or should — make.

Writing in the current issue of The Atlantic about the recent tragic death of a woman hit by an experimental self-driving Uber car, Broussard said, "An overwhelming number of tech people (and investors) seem to want self-driving cars so badly that they are willing to ignore evidence suggesting that self-driving cars could cause as much harm as good."

Again, the blind optimism she calls technochauvinism. "In a self-driving car, death is an unavoidable feature, not a bug," she writes.

China has prioritized developing sensors and positioning technologies for self-driving vehicles and the race to put AI in the driver's seat in Silicon Valley seems to be flat out.

It brings to mind a famous line from Jack Kerouac's classic On the Road: "Whither goest thou, America, in thy shiny car in the night?"