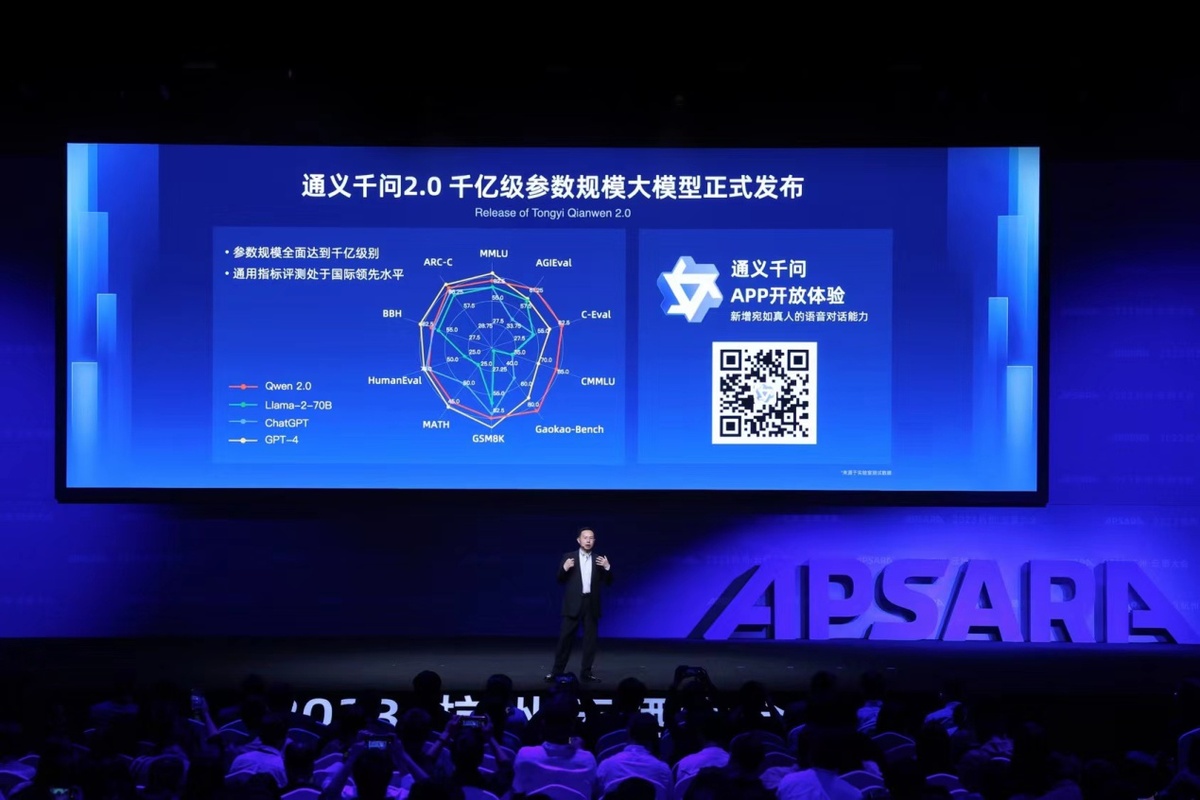

Alibaba Cloud unveils Tongyi Qianwen 2.0

By Fan Feifei | chinadaily.com.cn | Updated: 2023-10-31 20:45

Alibaba Cloud, the cloud computing unit of Chinese tech heavyweight Alibaba Group Holding Ltd, on Tuesday unveiled the latest version of its large language model, Tongyi Qianwen 2.0, and a suite of industry-specific models amid an intensifying AI race among tech companies.

Tongyi Qianwen 2.0 has hundreds of billions of parameters, a benchmark used to measure AI model power, and represents a substantial upgrade from its predecessor launched in April, Alibaba Cloud said.

The model has demonstrated enhanced capabilities in understanding complex instructions, copywriting, reasoning, memorizing and preventing hallucinations.

"Currently, 80 percent of China's technology companies and half of large model companies run on Alibaba Cloud. We aim to be the most open cloud in the era of AI," said Joe Tsai, chairman of Alibaba Group, at Alibaba Cloud's annual flagship tech event, the Apsara Conference.

"We hope that through the cloud, it will become easier and more affordable for everyone to develop and use AI, so we can help, especially small and medium-sized enterprises, to turn AI into huge productivity," he added.

He said Alibaba's AI model-sharing platform ModelScope hosts more than 2,300 models and has 2.7 million developers, with more than 100 million model downloads.

Alibaba Cloud also released a series of industry-specific models to boost productivity across a wide range of fields, including customer support, legal counseling, healthcare, finance, document management, and audio and video management.

"Large language models hold immense potential in revolutionizing industries. We're committed to using cutting-edge technologies, including generative AI, to help customers capture the growth momentum forward," said Zhou Jingren, CTO of Alibaba Cloud.

To help businesses better reap the benefits of generative AI in a cost-effective way, the company is launching a more powerful foundation model as well as industry-specific models to tackle domain-specific challenges, Zhou said.

He said the company plans to open-source the 72-billion-parameter version of Tongyi Qianwen later this year. It has already open-sourced LLMs with 7 billion and 14 billion parameters.